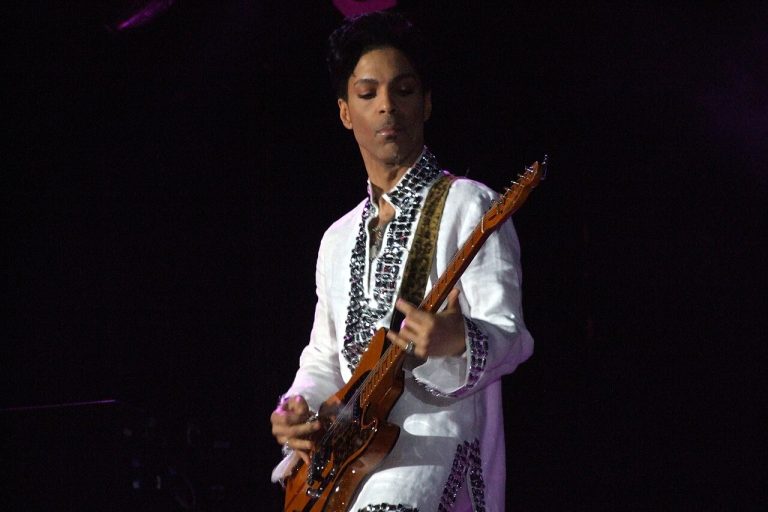

(Photo by Imtiyaz Ali)

As companies push more software decisions through machine learning, a quiet problem has emerged: the same infrastructure is often asked to do two very different jobs. At Spotify, the solution wasn’t better model tuning; it was a complete architectural separation between Personalisation and Experimentation.

The Conflict of Interest

Early in Spotify’s journey, these two systems were tightly linked. However, this coupling created a fundamental tension:

- Personalisation Systems demand speed, low latency, and high stability. They answer live requests where any delay is visible to millions of users.

- Experimentation Systems demand flexibility, accuracy, and the ability to accept failure as part of learning.

By splitting these into distinct systems with clear boundaries, Spotify has ensured that a flawed experiment no longer risks production outages, and production spikes don’t invalidate weeks of experimental data.
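One way to picture such a boundary is a serving path that hands data to the experimentation side without ever waiting on it. The sketch below is purely illustrative and is not Spotify's implementation: `ExposureEvent`, `ExposureLog`, `serve_recommendations`, the model version string, and the placeholder track ids are all hypothetical names invented for this example.

```python
import queue
from dataclasses import dataclass


@dataclass(frozen=True)
class ExposureEvent:
    """What the serving path tells the experimentation side (hypothetical schema)."""
    user_id: str
    model_version: str
    item_ids: tuple


class ExposureLog:
    """Bounded hand-off buffer between serving and experimentation."""

    def __init__(self, capacity: int = 1000) -> None:
        self._events = queue.Queue(maxsize=capacity)
        self.dropped = 0  # lets analysts see gaps instead of trusting biased data

    def offer(self, event: ExposureEvent) -> bool:
        """Never blocks: if the buffer is full, drop the event and count it."""
        try:
            self._events.put_nowait(event)
            return True
        except queue.Full:
            self.dropped += 1
            return False

    def drain(self) -> list:
        """Called from the experimentation side, on its own schedule."""
        events = []
        while True:
            try:
                events.append(self._events.get_nowait())
            except queue.Empty:
                return events


def serve_recommendations(user_id: str, log: ExposureLog,
                          model_version: str = "stable-v1") -> list:
    """Serving path: always answers, whatever state the log is in."""
    items = ("track-a", "track-b", "track-c")  # placeholder for a real ranking call
    log.offer(ExposureEvent(user_id, model_version, items))
    return list(items)


# Simulate a stalled experimentation consumer with a tiny buffer.
log = ExposureLog(capacity=2)
responses = [serve_recommendations(f"user-{i}", log) for i in range(3)]
```

In this sketch a slow or broken consumer can cost the experiment some events, but it cannot delay a response, and the `dropped` counter gives the experimentation side a way to notice that its data has gaps.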

The “Blast Radius” and the AI Era

In the current AI era, recommendation systems have grown more complex and harder to reason about. Small changes can have wide, unexpected effects. Spotify’s response is to slow down decision-making without slowing down delivery.

“The goal is to catch issues early, when they are cheaper to fix and easier to explain.”

With a separate evaluation path, models are reviewed and debated before they are ever served at scale. This leaves a clear record of how decisions were made, a vital asset when teams need to revisit past choices or explain a shift in user behavior.
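As a rough illustration of what a "clear record" could look like, the sketch below checks a candidate model's offline metrics against agreed minimums and keeps the outcome together with its reasons. Everything here is an assumption made for the example: the `DecisionRecord` and `review_candidate` names, the metric names, and the threshold values are hypothetical and do not come from Spotify.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DecisionRecord:
    """A reviewable trace of why a candidate was approved or rejected."""
    model_version: str
    metrics: dict
    thresholds: dict
    approved: bool
    reasons: list = field(default_factory=list)


def review_candidate(model_version: str, metrics: dict, thresholds: dict) -> DecisionRecord:
    """Compare each metric with its minimum and record every failure."""
    reasons = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            reasons.append(f"{name}: missing")
        elif value < minimum:
            reasons.append(f"{name}: {value} below minimum {minimum}")
    # Approved only when no check failed.
    return DecisionRecord(model_version, dict(metrics), dict(thresholds),
                          approved=not reasons, reasons=reasons)


# Hypothetical metric names and values, for illustration only.
record = review_candidate(
    "candidate-v2",
    metrics={"offline_ndcg": 0.41, "coverage": 0.72},
    thresholds={"offline_ndcg": 0.40, "coverage": 0.75},
)
print(json.dumps(asdict(record), indent=2))  # could be archived alongside the model
```

The point of the design is less the threshold check than the artifact it produces: a serialisable record that a team can reread months later when a past choice is questioned.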

Platform Engineering as the Hero

The standout lesson from Spotify’s approach is that the primary challenge of scaling AI isn’t the model itself; it is coordination.

- Platform Engineering is the system that decides how ideas move through an organization.

- Success requires shared tooling for logging and review, as well as the patience to let measurement guide the way.

The Practical Takeaway

Infrastructure shapes behavior. While most companies don’t operate at Spotify’s scale, the lesson remains: separating experimentation from serving creates the friction necessary to ask whether a system is actually doing what it was meant to do. In the push to scale AI, designing systems that favor learning over raw speed may be the most practical strategy for long-term success.